Virtual Memory

#lecture note based on 15-213 Introduction to Computer Systems

Notice: lots of processes can run at the same time, yet their memory addresses don’t overlap…

Goal: many programmes run in RAM, each of them have isolated stack, heap, and stuff

Notice: if we fork a process and and make both processes print heap/stack/data/text address, they print the same addresses, but one process can write without changing data on the other process…

Operating system somehow makes sure they aren’t using the same memory!

H2 Virtual vs Physical Memory

Address in a programme are usually not physical addresses.

- Simple system: cpu request with physical address

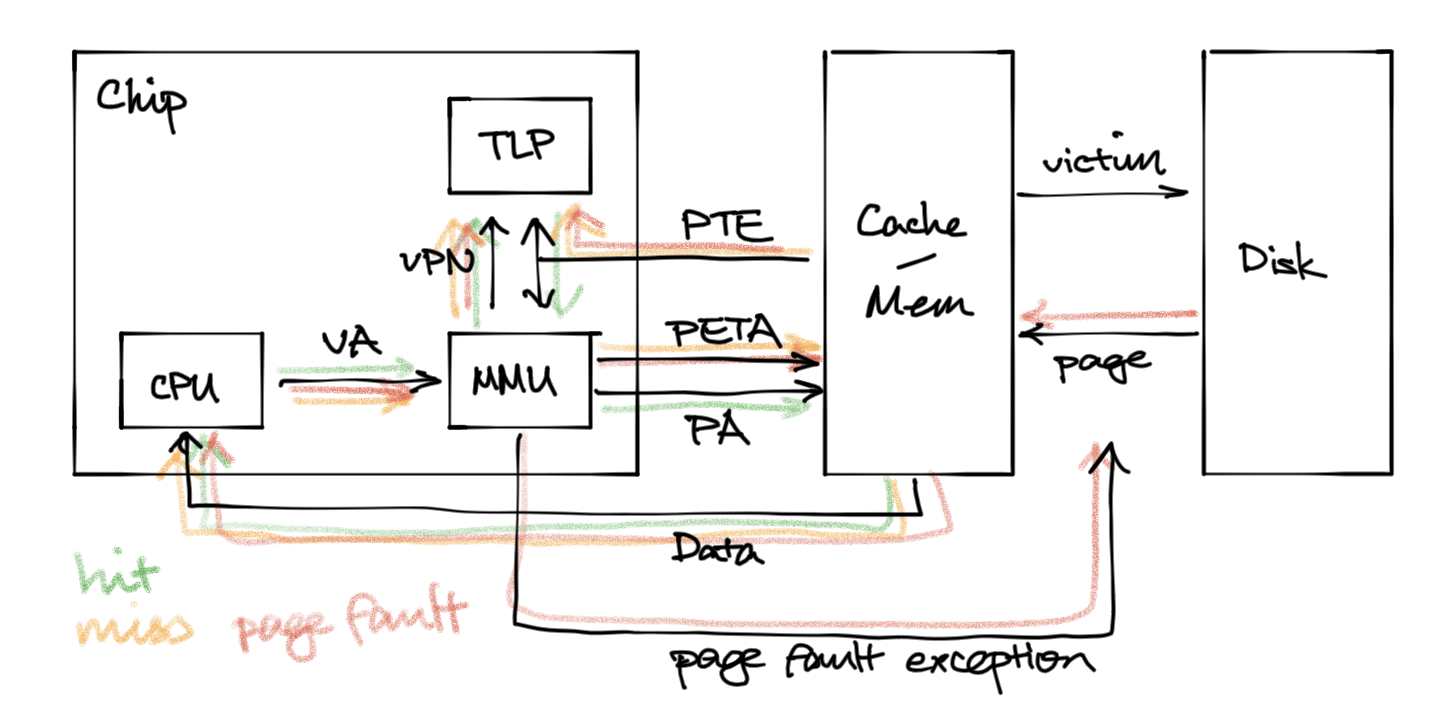

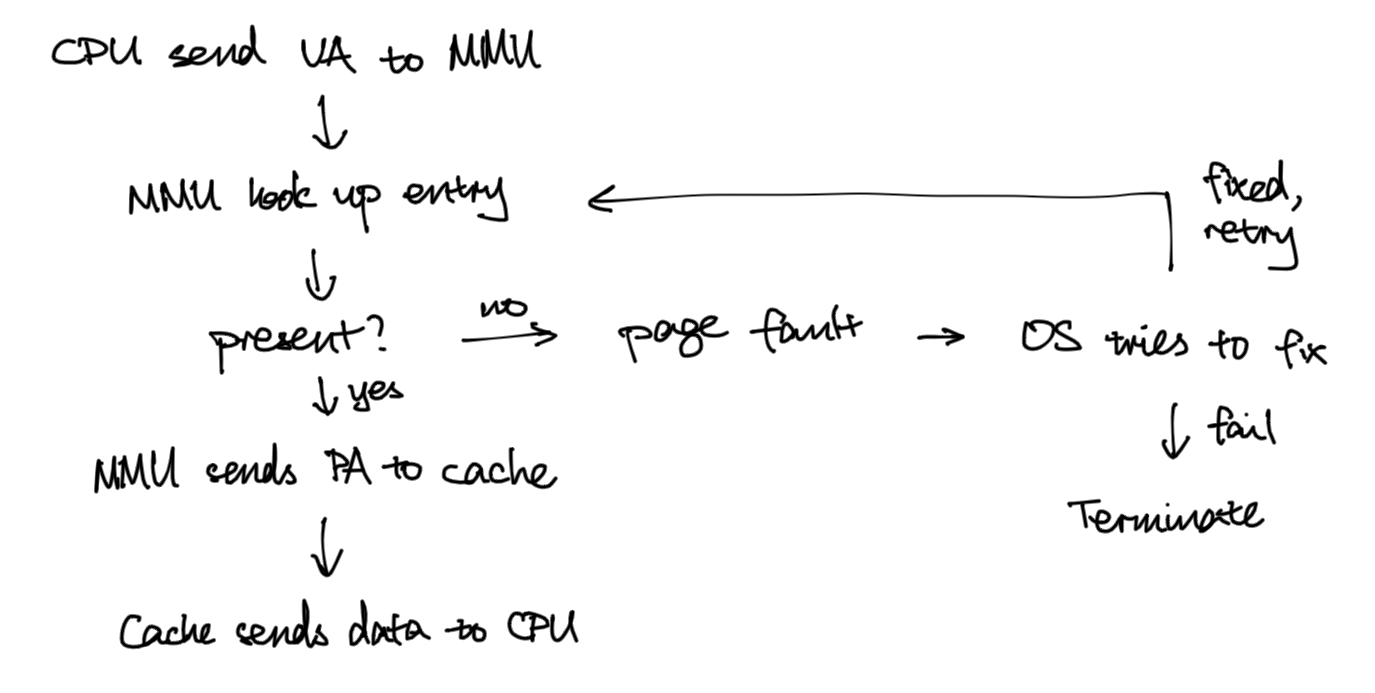

- Virtual addressing system: CPU sends virtual memory address, MMU translates that to physical address before sending request to main memory. So:

- all processes can just use virtual memory addresses

- all processes can think they have 64 bits of addressing space

- operating system decides what physical address correspond with what virtual address

- same virtual address in different processes can map to different physical address

- … or share the same physical address (if read-only, library code, etc.)

- different processes cannot overwrite unshared physical memory other processes are using

- launching new process doesn’t require finding different address for heap/stack/etc

- simpler, more efficient memory management

- simplifies linking - addresses for different things in a programme are usually similar

- simplifies loading - copying virtual pages, be lazy in terms of copying actual physical pages

- can act as a cache as some data mapped to DRAM, some to disk

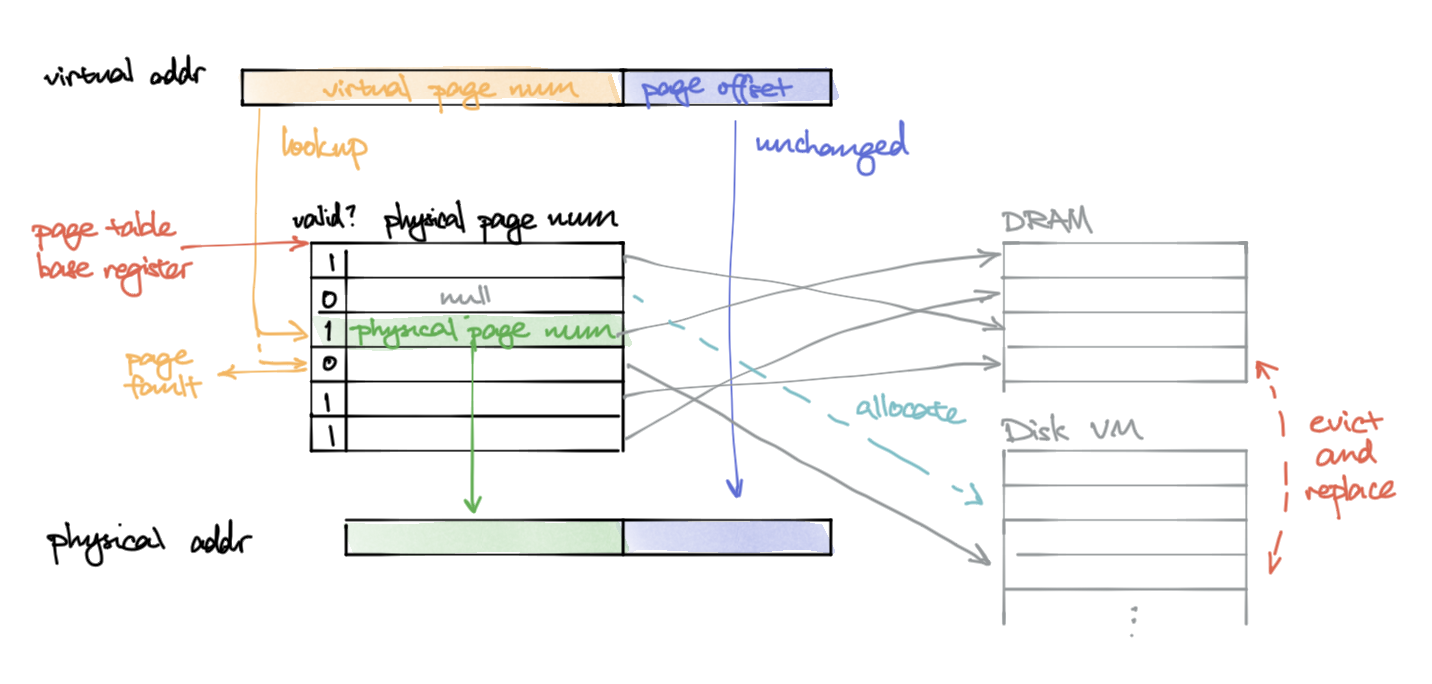

H3 Address spaces

- Contiguous non-negative integer

- Virtual address space with size , addresses

- Physical address space with size addresses

- Mapping function

- virtual addresses could get mapped to memory or disk

H3 Components

- Memory management unit (MMU)

- Sits between CPU and cache (cache access still requires physical address)

- Page table entry (PTE) - stores an individual mapping

- valid bit

- flag bits, like permissions

- sup - supervisor

- read

- write

- exec

- physical address / disk address to map to

- Page table - array of PTEs

- Hardware specific, part of ISA, and updated by kernel.

CR3points to start of page table for current running process

- Page - unit of data mapped by a single entry

- Page size , usually 4 KB, some use 4 MB

- Translation Lookaside Buffer (TLB)

- VA virtual address

- TLBI TLB index

- TLBT TLB tag

- VPO virtual page offset

- VPN virtual page num

- PA physical address

- PAO physical address offset

- PPN Physical page number

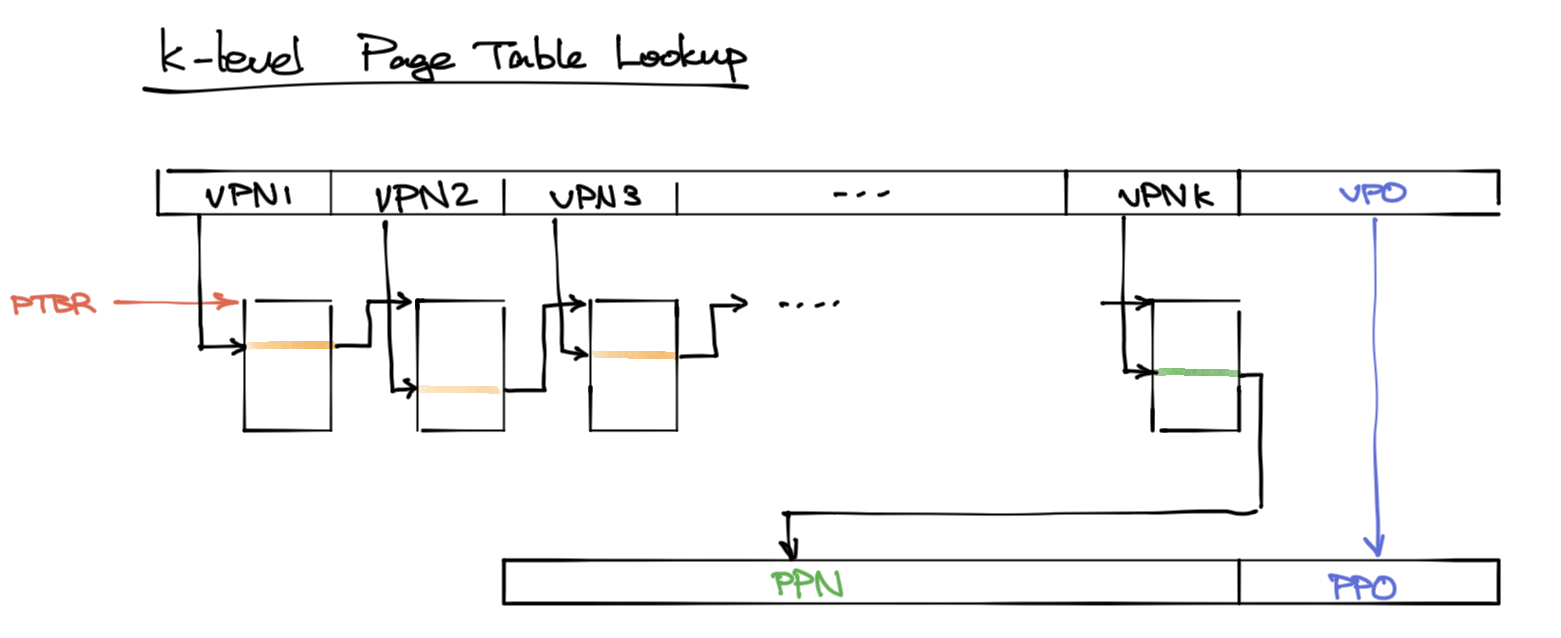

- Page table base register (PTBR)

H4 Single level page table

A simple implementation, but requires lots of space, many of which are allocated but not used for useful mapping.

It can just be an array of PTEs

Problem: this takes can take sth like 512 GB of memory… won’t even fit.

H4 Multilevel page table

Idea: split up VPN, use different portion to look up in different level

This way, we can simply have NULL and eliminate all the subsequent levels.

Trouble: We can cache miss at every level, so maybe good caching of the mappings would be nice.

H3 Virtual memory like cache

- Like a fully associative cache, but mapping function much more complicated.

- Hardware doesn’t enforce replacement policy. Operating system needs to do this.

- Thrashing - when really needing more physical memory, so computer keeps writing reading disk

- Demand paging - waiting for page miss to copy data to DRAM

H3 Translation Lookaside Buffer (TLB)

To speed up lookups, make use of locality again!. We usually hit something in the cache, so no need to go through all these translations.

Technically, putting PTEs in L1 works, but:

- other data may evict PTEs

- L1 still has delay

Instead, using a dedicated TLB:

- Hardware in the MMU

- Caches mapping to save time

- set-associative (sth like 500 cached mappings)

- Misses are usually rarer than cache misses

H4 TLB accesses

Similar to cache access, mappings can be broken down into:

- VPN address:

| TLBT (tag) | TLBI (index) | VPO |- Where TLBI is used to select set

- Each line:

| v | tag | PTE |

H2 Memory-mapped Files

- Used to be that all pages of physical RAM is back by some page on disk

- Whatever in memory is cache of what’s on disk

- Modern: maybe less necessary because memory is cheaper, but still true for mapping to swap and file on disk

- (Can explicitly request two processes to share memory)

- Forking process - copy-on-write sharing:

- duplicate the page tables (so they share same physical memory)

- mark everything read only

- copy page upon write fault