Website Update - Launching the Third Generation

#post #front-end #python #automation #dev-log

H2 Preamble aka ramble

This post has been delayed for almost a month… things were just not quite working out the way they should. Very well, now that most issues are addressed, it is safe to say goodbye to the second generation of our website outlined here.

Working with Jekyll has been a fun experience, but we decided to experiment with Hugo anyway. There is already a Hugo theme for publishing Obsidian vaults called Quartz. Quartz comes with a clean front-end design, blazingly fast search, and a snappy graph view. Unfortunately, it didn’t quite work out of the box, perhaps because custom markdown formats get in the way. There never seems to be a standard for markdown anyway…

H2 Quartz-Plus

Because Quartz didn’t do some of the things we wanted, we kept changing small things one after another… eventually ending up with a variant we call Quartz-Plus.

H3 Addressed problems with Quartz

- Preprocessing script for better Obsidian vault integration and publishing control (use “published: true” in yaml frontmatter to whitelist files)

- Copying attachments from Obsidian to the right place (thereby supporting wikilink without leading folder path)

- Uses the first h1 tag in markdown as title in frontmatter so that Hugo is happy (otherwise use the filename as title)

- Support wikilink media embed like Obsidian does, including video, pdf, and audio embed

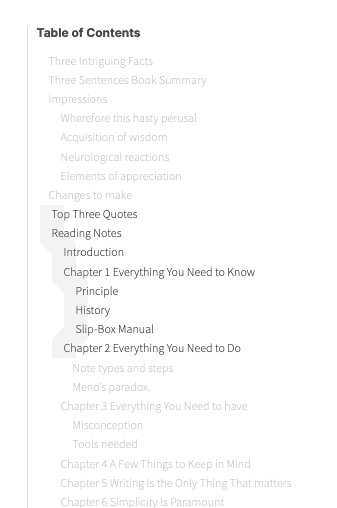

- Fancy table of contents on the side (with a chunker script that puts each heading in its own isolated div)

- Pretty calendar embed using FullCalendar

- Custom theme

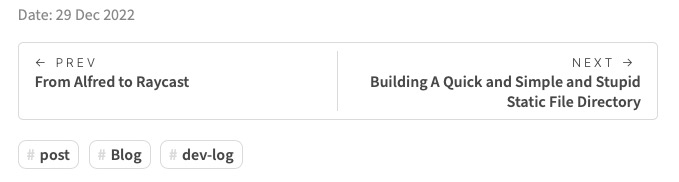

- Blog post navigation control

- Color scheme

- Miscellaneous CSS tweaks

- Layout system - supports specifying layout in yaml metadata

- Postprocessing script to clean up graph so that non-existent links or nodes don’t show up

- GitHub workflow to build a website from vault repo and deploy to branch on the same or different repo (supports private repo)

- Netlify deployment support (by supporting case-insensitive URLs)

- Walk through markdown files and add inline tags to yaml metadata

- Make commands to simplify workflow

H3 Some added features showcase

H6 Table of contents

H6 Blog post navigation

H6 Minimap (work in progress)

H3 Roadmap

- Obsidian wikilink style Excalidraw support using their CLI

- Fix orphan not showing up in graph (only partially fixed currently)

- Option to display footnotes as sidenotes

- Minimap

- Bugs
  - https://github.com/jackyzha0/quartz/issues/260
  - preprocess script picking up tags from code fences
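One possible way to tackle the last bug is to drop fenced code blocks and inline code before running the tag regex. This is only a minimal sketch: it assumes the same tag pattern as the preprocess script, and `strip_code` and `get_inline_tags_outside_code` are hypothetical names that are not part of Quartz-Plus.

```python
import re

# Same tag pattern the preprocess script uses for inline tags.
TAG_PATTERN = re.compile(r"(?<!\S)#[\w/\-]*[a-zA-Z\-_/][\w/\-]*")

# A fence marker is three backticks (built here to avoid nesting fences) or three tildes.
FENCE = "`" * 3
# A fenced block: an opening marker at line start, up to a line starting with the same marker.
FENCE_PATTERN = re.compile(r"(?ms)^(" + FENCE + r"|~~~).*?^\1[^\n]*$")
# Inline code spans delimited by single backticks.
INLINE_CODE_PATTERN = re.compile(r"`[^`\n]*`")


def strip_code(md_text: str) -> str:
    """Remove fenced code blocks and inline code spans from markdown text."""
    without_fences = FENCE_PATTERN.sub("", md_text)
    return INLINE_CODE_PATTERN.sub("", without_fences)


def get_inline_tags_outside_code(md_text: str) -> list:
    """Return inline tags (without the leading #) that appear outside code."""
    return [tag[1:] for tag in TAG_PATTERN.findall(strip_code(md_text))]
```

An unclosed fence is left untouched by this sketch, so tags inside it would still be picked up.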

H2 The new publishing pipeline

It was only after (almost) completing the project that we realised how much time had been poured into getting our markdown files to render properly on a Hugo site. The solution is cumbersome, but it works… To be honest, we would start with another approach if we were to do this again.

H3 Preprocessing

Basically, preprocessing involves walking through an entire Obsidian vault and copying relevant files to Hugo’s content or static directory while adjusting some of the markdown formatting along the way.

# inspired by and based on https://github.com/sspaeti/second-brain-public
import argparse
import glob
import os
import re
import shutil
from pathlib import Path

import frontmatter

# - walk the vault recursively and find all the .md files
# - then loop through the files and find all the published notes
# - if a published note is found, copy it to the public folder
# - copy first h1 to frontmatter as title
# - add inline tags to metadata

regexp_md_attachment = r"\[\[((?:.+)\.(?:\S+))(?:\|\d+)?\]\]"  # attachments (maybe)
h1_regex = r"(?m)^# (.*)"  # finds entire h1 line

# = ACTIONS =

def find_attachment_and_copy(file_name: str, root_path: str, target_attachment_path: str):
    # search the whole vault for files with this name
    files = glob.glob(root_path + "/**/" + file_name, recursive=True)
    # print(files)
    for file in files:
        shutil.copy(file, os.path.join(target_attachment_path, file_name))
        # print(f"attachment `{file}` copied to {target_attachment_path}")

# read a md file and add inline tags to frontmatter
def add_inline_tag_to_yaml(md_file_path):
    with open(md_file_path, "r") as f_r:
        md_frontmatter = frontmatter.load(f_r)
    with open(md_file_path, "r") as f_r:
        md_text = f_r.read()

    if "tags" not in md_frontmatter.keys():
        tags_field = []
    else:
        tags_field = md_frontmatter["tags"]
    # a single tag may be stored as a plain value; normalise it to a list
    # (comparing type() against the string "list" was always true)
    if not isinstance(tags_field, list):
        tags_field = [tags_field]

    inline_tags = get_inline_tags(md_text)
    tags_field = list(set(tags_field + inline_tags))
    md_frontmatter["tags"] = tags_field

    with open(md_file_path, "wb") as f_w:
        frontmatter.dump(md_frontmatter, f_w)

def walk_through_markdown_for_attachments(md_file_path: str, root_path: str, target_attachment_path: str):
    # BUG: links like something 3.0 are identified as file
    # search for attachments in markdown file
    with open(md_file_path, "r") as f:
        file_content = f.read()
    attachments = re.findall(regexp_md_attachment, file_content)
    if attachments:
        print(f"ATTACHMENT SEARCH RESULT for {md_file_path}", attachments)
        for attachment in attachments:
            # drop a trailing size hint such as |300
            attachment = re.sub(r"\|\d+", "", attachment)
            # print(attachment)
            if attachment:
                # TODO excalidraw not handled correctly. Maybe try https://github.com/tommywalkie/excalidraw-cli to turn excalidraw into svg first.
                # find attachment recursively in folder and copy to public attachment folder
                find_attachment_and_copy(os.path.basename(attachment), root_path, target_attachment_path)

def find_and_copy_published(source_path: str, copy_to_path: str):
    """
    - find `published: true` frontmatter in private folder and copy it to public folder.
    - copies h1 title (`# ..`) into frontmatter as YAML title if no title exists
    """
    for root, dirs, files in os.walk(source_path):
        for file in files:
            # print(file)
            if file.endswith(".md"):
                file_path = os.path.join(root, file)
                # use published frontmatter instead
                with open(file_path, "r") as f:
                    fmt = frontmatter.load(f)
                # print(fmt.metadata)
                if ("published" in fmt.metadata.keys()) and (fmt.metadata["published"] == True):
                    # destination should be lower-case (spaces will be handled by hugo with `urlize`)
                    # file_name_lower = os.path.basename(file_path).lower() # HACK unnecessary?
                    # print(f"publish: {file_path}")
                    # copy that file to the publish notes directory
                    shutil.copy(
                        # file_path, os.path.join(copy_to_path, file_name_lower) # HACK unnecessary?
                        file_path, os.path.join(copy_to_path, file)
                    )

def add_h1_or_filename_as_title_frontmatter(md_file_path: str):
    with open(md_file_path, "r") as f_r:
        md_frontmatter = frontmatter.load(f_r)

    if "title" not in md_frontmatter.keys():
        # read line by line and search for h1
        with open(md_file_path, "r") as f:
            lines = f.readlines()
        for i, line in enumerate(lines):
            result = re.findall(h1_regex, line)
            if result:
                # print(f"found h1 in {md_file_path} line: {line}")
                # put in frontmatter and delete h1 line if any
                # add h1 header to `title` to frontmatter
                md_frontmatter["title"] = result[0]
                print(md_frontmatter["title"])
                # overwrite current file with added title
                dump_frontmatter(md_file_path, md_frontmatter)
                # then delete title
                with open(md_file_path, "r") as f:
                    modified_lines = f.readlines()
                for j, line in enumerate(modified_lines):
                    res = re.findall(h1_regex, line)
                    if res:
                        # print(f"found h1 in {md_file_path} line: {line}")
                        delete_line(md_file_path, j)
                        break
                break
        else:
            # for-else: the loop finished without finding an h1, so use the filename
            md_frontmatter["title"] = Path(md_file_path).stem
            # overwrite current file with added title
            with open(md_file_path, "wb") as f:
                frontmatter.dump(md_frontmatter, f)

# = HELPERS =

def get_inline_tags(md_text):
    # modified from https://github.com/obsidian-html/obsidian-html/blob/b9f5869cd9453db7909174bb004b5d309702c545/obsidianhtml/parser/MarkdownPage.py
    # raw string so that \S and \w are passed to the regex engine unchanged
    return [x[1:].replace('.', '') for x in re.findall(r"(?<!\S)#[\w/\-]*[a-zA-Z\-_/][\w/\-]*", md_text)]

def dump_frontmatter(md_file_path, md_frontmatter):
    with open(md_file_path, "wb") as f:
        frontmatter.dump(md_frontmatter, f)

def delete_line(file_path: str, line_to_delete: int):
    # line_to_delete is a 0-based index, as produced by enumerate()
    # print(f'attempting to delete line {line_to_delete} of file {file_path}')
    with open(file_path, 'r') as f:
        lines = f.readlines()
    # keep everything except the indexed line
    # (slicing up to line_to_delete-1 also dropped the line before it)
    lines = lines[:line_to_delete] + lines[line_to_delete+1:]
    with open(file_path, 'w') as f:
        f.writelines(lines)

# = RUN =

if __name__ == "__main__":
    # set variables
    parser = argparse.ArgumentParser()
    parser.add_argument('--source_path', required=True)  # obsidian vault
    parser.add_argument('--target_path', required=True)  # wherever hugo looks for content
    parser.add_argument('--target_attachment_path', required=True)  # wherever hugo looks for attachments
    args = parser.parse_args()

    find_and_copy_published(args.source_path, args.target_path)

    # loop through md in the published content dir
    for root, dirs, files in os.walk(args.target_path):
        for file in files:
            if file.endswith(".md"):
                md_file_path = os.path.join(root, file)
                print('proc', md_file_path)
                # copy referenced attachments
                walk_through_markdown_for_attachments(md_file_path, args.source_path, args.target_attachment_path)
                # add h1 as title frontmatter if none exists
                add_h1_or_filename_as_title_frontmatter(md_file_path)
                # add inline tags as frontmatter
                add_inline_tag_to_yaml(md_file_path)
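The `BUG` noted in `walk_through_markdown_for_attachments` (links like `[[something 3.0]]` being treated as files) comes from the attachment regex accepting anything after a dot as an extension. One possible workaround, sketched here under the assumption that attachments only ever use a known set of extensions, is to whitelist those extensions. `ATTACHMENT_EXTENSIONS`, `ATTACHMENT_PATTERN` and `find_attachment_names` are hypothetical names and not part of the script above.

```python
import re

# Assumed set of attachment extensions; adjust to whatever the vault actually contains.
ATTACHMENT_EXTENSIONS = ("png", "jpg", "jpeg", "webp", "svg", "mp3", "m4a", "mp4", "pdf")

# Matches [[name.ext]] and [[name.ext|300]], capturing only "name.ext".
ATTACHMENT_PATTERN = re.compile(
    r"\[\[([^\[\]|]+\.(?:" + "|".join(ATTACHMENT_EXTENSIONS) + r"))(?:\|\d+)?\]\]",
    re.IGNORECASE,
)


def find_attachment_names(md_text: str) -> list:
    """Return the file names of wikilinked attachments with a whitelisted extension."""
    return ATTACHMENT_PATTERN.findall(md_text)
```

The trade-off is that any new attachment type has to be added to the whitelist by hand.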

H3 Postprocessing

Another Python script tries to fix the link index generated by hugo-obsidian (called Obsidian-Hugo in the script's comments), which is part of Quartz.

"""

gets rid of dead links indexed by Obsidian-Hugo. Definitely not the most efficient thing to do, but hopefully it gets the job done.

the index file is usually at "assets/indices/linkIndex.json"

Basic idea:
- read linkIndex.json
- loop through each index entry
    - loop through each links entry
        - loop through each node
            - convert character encoding
            - get rid of links that don't correspond to a file in "content"
    - loop through each backlinks entry
        - loop through each node
            - convert character encoding
            - get rid of links that don't correspond to a file in "content"
- loop through each link entry
    - convert character encoding
    - get rid of links that don't correspond to a file in "content"

Also, Obsidian-Hugo seems to mistake internal block or section references as references to root, so all links targeting "/" will get removed

Fix missing orphans by adding self link

"""

import json
import urllib.parse
import os
import re

INDEX_FILE = "./assets/indices/linkIndex.json"
CONTENT_FOLDER = "./content"

def decode_url_encoding(s) -> str:
    return urllib.parse.unquote(s)

def load_json(path) -> dict:
    with open(path) as f:
        return json.load(f)

def strip_name(s) -> str:
    # gets rid of the .md extension, then symbols, spaces, etc.
    s = s.replace(".md", "")
    for symbol in "?&!/ -\\%:()|\"'.,;":
        s = s.replace(symbol, "")
    return s

def md_file_existence_heuristic(existing_files_set, encoded_url) -> bool:
    return strip_name(encoded_url) in existing_files_set

data = load_json(INDEX_FILE)
existing_files = set([strip_name(file) for file in os.listdir(CONTENT_FOLDER)])

links_index: dict = data["index"]["links"]
backlinks_index: dict = data["index"]["backlinks"]
links_list: list = data["links"]

processed_data = {
    "index": {
        "links": {},
        "backlinks": {},
    },
    "links": [],
}

# = Remove false links =
for key in links_index.keys():
    if md_file_existence_heuristic(existing_files, key):
        processed_data["index"]["links"][key] = []
    else:
        # print(f'removing {key}')
        continue
    for i, entry in enumerate(links_index[key]):
        if md_file_existence_heuristic(existing_files, entry["target"]):
            processed_data["index"]["links"][key] += [entry]
        else:
            # print(f'removing {entry}')
            continue

for key in backlinks_index.keys():
    if md_file_existence_heuristic(existing_files, key):
        processed_data["index"]["backlinks"][key] = []
    else:
        # print(f'removing {key}')
        continue
    for i, entry in enumerate(backlinks_index[key]):
        if md_file_existence_heuristic(existing_files, entry["target"]):
            processed_data["index"]["backlinks"][key] += [entry]
        else:
            # print(f'removing {entry}')
            continue

for i, entry in enumerate(links_list):
    if md_file_existence_heuristic(existing_files, entry["target"]) and ((entry["source"] == "/") or md_file_existence_heuristic(existing_files, entry["source"])):
        processed_data["links"] += [entry]
    else:
        # print(f'removing {entry}')
        continue

# Print deletion summary

print(f'POSTPROCESS: removed {len(data["index"]["links"]) - len(processed_data["index"]["links"])} false outbound links from index of {len(data["index"]["links"])} links')

print(f'POSTPROCESS: removed {len(data["index"]["backlinks"]) - len(processed_data["index"]["backlinks"])} false backlinks from index of {len(data["index"]["backlinks"])} links')

print(f'POSTPROCESS: removed {len(data["links"]) - len(processed_data["links"])} false links of {len(data["links"])} links')

# = fix orphans =
linked_nodes = set([entry["source"] for entry in processed_data["links"]]).union(set([entry["target"] for entry in processed_data["links"]]))
orphans_added = 0
for key in links_index.keys():
    if key not in linked_nodes:
        processed_data["links"] += [{
            "source": key,
            "target": key,
            "text": key
        }]
        orphans_added += 1
        # print(f"add self link for {key}")

print(f'POSTPROCESS: added {orphans_added} orphans to the graph')
# BUG doesn't work if the note is already an orphan in Obsidian, i.e. it only works for notes orphaned because of false link removal

# Write the cleaned index back to linkIndex.json
json_object = json.dumps(processed_data, indent=2)
with open(INDEX_FILE, "w") as outfile:
    outfile.write(json_object)
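The `BUG` comment above points at why true orphans stay invisible: the loop only looks at keys that already exist in the link index, and a note with no links at all never gets a key. A possible fix is to iterate over every published page instead. The sketch below only shows that idea on plain data. `add_self_links` is a hypothetical helper, and it assumes `page_keys` is already in the same format as the keys in `linkIndex.json` (deriving those keys from file names depends on the indexer's URL scheme and is not shown).

```python
def add_self_links(links: list, page_keys: list) -> list:
    """Return a copy of `links` with a self link added for every unlinked page.

    links:     entries shaped like {"source": ..., "target": ..., "text": ...}
    page_keys: keys of all published pages (assumed to match the index key format)
    """
    linked = {entry["source"] for entry in links} | {entry["target"] for entry in links}
    result = list(links)
    for key in page_keys:
        if key not in linked:
            # a self link makes the page show up as an isolated node in the graph
            result.append({"source": key, "target": key, "text": key})
            linked.add(key)
    return result
```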

H3 Hugo… with more text processing

Right, we have to do extra work using Go in the Hugo template, just like what we did last time with Ruby for Jekyll. For example, this gets wikilink embeds to display correctly. It turns out Go’s regex engine doesn’t support some features, such as lookaheads and backreferences… which is awful.

{{/* Embeds */}}

<!-- image with dimension -->

{{$content = replaceRE `(?i)\!\[\[(.*)\.(jpg|png|jpeg|webp|svg)\|(\d+)\]\]` `<img src="/attachments/${1}.${2}" alt="${1}.${2}" style="width: ${3}px">` $content}}

<!-- image without dimension -->

{{$content = replaceRE `(?i)\!\[\[(.*)\.(jpg|png|jpeg|webp|svg)\]\]` `<img src="/attachments/${1}.${2}" alt="${1}.${2}">` $content}}

<!-- audio -->

{{$content = replaceRE `(?i)\!\[\[(.*)\.(mp3|m4a)\]\]` `<audio controls src="/attachments/${1}.${2}"></audio>` $content}}

<!-- pdf -->

{{$content = replaceRE `(?i)\!\[\[(.*)\.(pdf)\]\]` `<iframe src="/attachments/${1}.${2}" style="width: 100%; height: 60vh;"></iframe>` $content}}

<!-- video -->

{{$content = replaceRE `(?i)\!\[\[(.*)\.(mp4)\]\]` `<video controls="" src="/attachments/${1}.${2}"></video>` $content}}
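For comparison, the same kind of embed rewriting could in principle be done in Python during preprocessing rather than in the Hugo template. This is a rough sketch and not what Quartz-Plus does. `convert_embeds` and `_render_embed` are hypothetical names, and the single pattern excludes brackets and pipes from the file name instead of using `.*`, so it behaves slightly differently from the template rules above.

```python
import re

IMAGE_EXT = {"jpg", "jpeg", "png", "webp", "svg"}
AUDIO_EXT = {"mp3", "m4a"}

# ![[name.ext]] or ![[name.ext|300]]: captures the name, the extension and an optional width.
EMBED_PATTERN = re.compile(r"!\[\[([^\[\]|]+)\.(\w+)(?:\|(\d+))?\]\]")


def _render_embed(match) -> str:
    """Turn one wikilink embed match into an HTML tag based on its extension."""
    name, ext, width = match.group(1), match.group(2), match.group(3)
    src = f"/attachments/{name}.{ext}"
    kind = ext.lower()
    if kind in IMAGE_EXT:
        style = f' style="width: {width}px"' if width else ""
        return f'<img src="{src}" alt="{name}.{ext}"{style}>'
    if kind in AUDIO_EXT:
        return f'<audio controls src="{src}"></audio>'
    if kind == "mp4":
        return f'<video controls src="{src}"></video>'
    if kind == "pdf":
        return f'<iframe src="{src}" style="width: 100%; height: 60vh;"></iframe>'
    return match.group(0)  # unknown type: leave the embed untouched


def convert_embeds(md_text: str) -> str:
    """Replace Obsidian-style media embeds in markdown text with HTML tags."""
    return EMBED_PATTERN.sub(_render_embed, md_text)
```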

H3 Front-end text processing

And now we’re using JavaScript to do some more wacky HTML text manipulation for reasons I won’t go into… it has to do with how Hugo generates HTML and how that breaks some of our stuff… you can read the code here.

H3 GitHub Action

A GitHub workflow now detects push on either the Hugo repo or our vault repo and builds the website, which then gets deployed on Netlify.

name: Deploy to site branch on private vault repository

on:
  repository_dispatch: # triggered by vault push
    types: [build_static]
  push:
    branches:
      - hugo

jobs:
  deploy:
    runs-on: ubuntu-20.04
    steps:
      - name: checkout zettelkasten
        uses: actions/checkout@v3
        with:
          repository: chaosarium/Zettelkasten
          token: ${{ secrets.VAULT_PAT }}
          path: zettelkasten

      - name: checkout quartz plus
        uses: actions/checkout@v3
        with:
          repository: chaosarium/quartz-plus
          path: quartz-plus

      - name: copy over config file
        working-directory: ./quartz-plus
        run: |
          rm ./data/config.yaml
          cp ../zettelkasten/config.yaml ./data/config.yaml

      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: 3.9

      - name: Install Python dependencies
        run: |
          python -m pip install --upgrade pip
          pip install python_frontmatter==1.0.0

      - name: Run Preprocess Script
        working-directory: ./quartz-plus
        run: python preprocess.py --source_path "../zettelkasten" --target_path "content" --target_attachment_path "static/attachments" > /dev/null

      - name: Build Link Index
        uses: jackyzha0/hugo-obsidian@v2.18
        with:
          index: true
          input: quartz-plus/content
          output: quartz-plus/assets/indices
          root: quartz-plus

      - name: Run Postprocess Script
        working-directory: ./quartz-plus
        run: sudo python postprocess.py

      - name: Setup Hugo
        uses: peaceiris/actions-hugo@v2
        with:
          hugo-version: '0.101.0'
          extended: true

      - name: Build
        working-directory: ./quartz-plus
        run: hugo --minify --destination "../public" --baseURL ${{ secrets.BASEURL }}

      - name: Deploy
        uses: peaceiris/actions-gh-pages@v3
        with:
          personal_token: ${{ secrets.VAULT_PAT }}
          external_repository: chaosarium/Zettelkasten
          publish_dir: ./public
          publish_branch: site # deploying branch
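The `repository_dispatch` trigger in this workflow only fires when something calls GitHub's "create a repository dispatch event" endpoint with a matching `event_type`. How the vault repo does that is not shown in this post, so the following is just a sketch of what such a call could look like from Python. `build_dispatch_request` is a hypothetical helper, and the owner, repo and token are placeholders supplied by the caller.

```python
import json
import urllib.request


def build_dispatch_request(owner: str, repo: str, token: str,
                           event_type: str = "build_static") -> urllib.request.Request:
    """Build (but do not send) a repository_dispatch request for the GitHub REST API."""
    url = f"https://api.github.com/repos/{owner}/{repo}/dispatches"
    # event_type must match one of the `types` listed under repository_dispatch
    body = json.dumps({"event_type": event_type}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned request to `urllib.request.urlopen` sends it. The target should be whichever repo hosts the workflow, and the token needs permission to trigger dispatch events on it.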

H2 From zettelkasten to the chaosarium.xyz network

Getting Quartz-Plus to work set off a whole chain reaction as we constantly came up with ideas for other things we could build under our new apex domain.

H3 Photo site

As announced in Introducing PhotoArchive & Launching Photography Site

H3 File repo

As discussed in Building A Quick and Simple and Stupid Static File Directory

H3 Subdomain listing

- https://photoarchive.chaosarium.xyz - photo site

- https://repo.chaosarium.xyz - file directory

- https://lambda.chaosarium.xyz - cloud function API

- https://go.chaosarium.xyz - redirector

- https://api.chaosarium.xyz - dedicated server API

- https://zettelkasten.chaosarium.xyz - public zettelkasten

- https://notes.chaosarium.xyz - alias for zettelkasten

- https://legacy.chaosarium.xyz - legacy website (will go offline at some point)